Compared to the CPU, is the GPU the darling of Moore's Law?

GPGPU (general-purpose computing on GPUs) contributed to NVIDIA's strong rise in 2016.

There are many reasons why 2016 was the year of the GPU. Outside the core areas (deep learning, VR, autonomous driving), however, the reasons for using GPUs for general-purpose computing are still vague to many people.

To figure out what the GPU does, start with the CPU. Most people are no strangers to computer CPUs, which may be attributed to Intel: as a supplier that has held a virtual monopoly on PC and server platforms for nearly a decade, Intel spends so heavily on advertising that nearly everyone has heard, to a greater or lesser degree, of its products at every level, from notebooks to supercomputers.

The CPU is designed for low-latency processing across many applications. It is ideal for multitasking workloads such as spreadsheets, word processing, web applications, and more. The CPU has therefore traditionally been the preferred computing solution for most enterprises.

In the past, when a company's IT manager said they would order more computing equipment or servers, or boost the performance of the cloud, they were generally thinking of CPUs.

Although the CPU is versatile, the number of cores a single CPU chip can carry is very limited. Most consumer chips have at most eight cores. As for Intel's enterprise product line, apart from the outlier Xeon Phi, which is designed for parallel computing, mainstream Xeon products (E3, E5, E7 series) top out at 22 cores.

It took decades for the CPU to evolve from a single core to today's multicore designs. Scaling up a chip as complex as a CPU is technically very difficult and requires progress on several fronts at once, such as shrinking transistors, reducing heat generation, and optimizing power consumption. The performance of today's CPUs owes much to years of hard work by Intel and AMD engineers. So far, no third PC CPU supplier in the world can compete with them, which shows both their accumulated expertise and how difficult CPU development is.

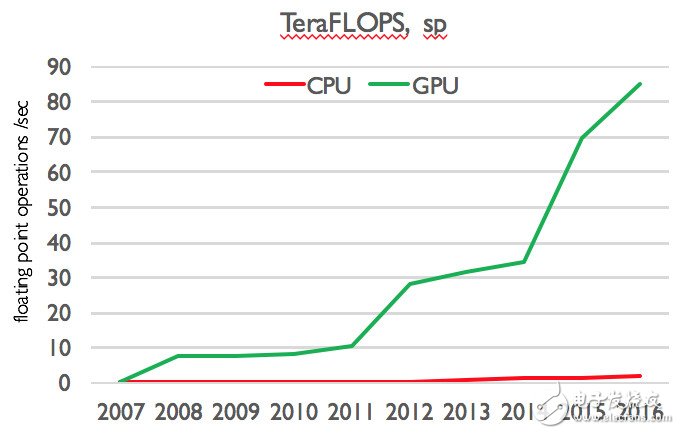

Is the GPU the darling of Moore's Law?

In terms of FLOPS, CPU performance improves by about 20% per year (Lei Feng.com note: this figure is disputed), and that is for highly optimized code.

As CPU performance growth slows (Lei Feng Network note: chip process technology in particular has advanced slowly in recent years; the limit of silicon-based chips is about 7nm, and the technologies meant to replace silicon are not yet mature), the CPU's data processing capability increasingly fails to keep pace with data growth. To make a simple comparison: IDC estimates that the world's data is growing at about 40% per year, and that the rate is accelerating.

Simply put, Moore's Law has come to an end, while data keeps growing exponentially.
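To make the gap concrete, here is a minimal sketch of the compounding arithmetic. It assumes the article's rough figures (about 20% per year for CPU performance, about 40% per year for data) hold constant, which is a simplification; the function names are hypothetical and chosen only for illustration.

```python
# Illustrative sketch: compound growth of CPU performance vs. data volume.
# The 20% and 40% annual rates are the article's rough figures, assumed
# constant here; they are not measurements.

CPU_RATE = 0.20   # assumed annual CPU performance growth
DATA_RATE = 0.40  # assumed annual data growth (IDC estimate quoted above)


def compound(rate: float, years: int) -> float:
    """Return the total growth factor after `years` at `rate` per year."""
    return (1.0 + rate) ** years


def gap(years: int) -> float:
    """Return how many times faster data has grown than CPU performance."""
    return compound(DATA_RATE, years) / compound(CPU_RATE, years)


if __name__ == "__main__":
    for years in (1, 5, 10):
        print(
            f"after {years:2d} years: "
            f"CPU x{compound(CPU_RATE, years):.2f}, "
            f"data x{compound(DATA_RATE, years):.2f}, "
            f"gap x{gap(years):.2f}"
        )
```

Under these assumptions, the gap between data volume and CPU performance more than doubles within five years and exceeds four times after ten.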

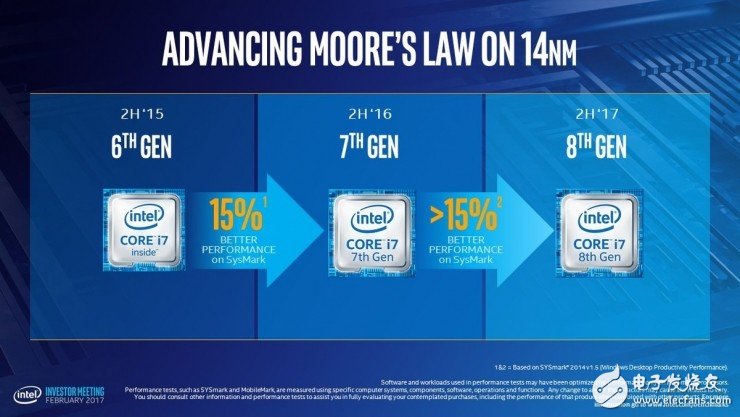

Intel Skylake, Kaby Lake, Coffee Lake roadmap

The consequence of data growing faster than CPU performance is that people have to resort to various techniques to get around computational bottlenecks, such as downsampling, indexing, or expensive scale-out tactics, in order to avoid long waits for the system to respond.

The unit of data we are dealing with today is the exabyte, and we are moving toward zettabytes. The terabyte, once considered enormous, is already commonplace in the consumer space. Pricing for enterprise-class terabyte storage has fallen to single digits (in US dollars).

At this price, companies keep all the data they acquire, and in the process they generate working sets large enough to overwhelm CPU-class data processing capabilities.

What does this have to do with the GPU?

The architecture of the GPU is very different from that of the CPU. First, the GPU is not versatile. Second, unlike consumer CPUs with their single-digit core counts, consumer GPUs typically have thousands of cores, which makes them especially well suited to large data sets. The GPU was designed with a single purpose: to maximize parallel computing. Each process shrink translates directly into more cores (Moore's Law is more evident for GPUs), meaning that GPU performance improves by about 40% per year. For now, GPUs can keep up with the data explosion.

Comparison of CPU and GPU performance growth, measured in TeraFLOPS

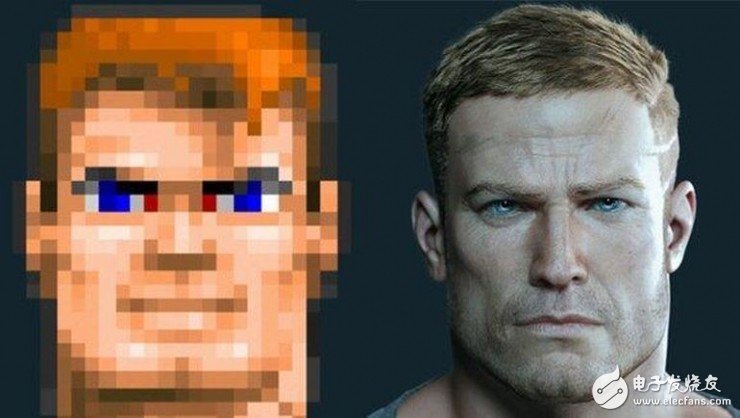

The beginning of the GPU

In the 1990s, a group of engineers realized that rendering polygon images on the screen was essentially a task that could be processed in parallel: the color of each pixel can be calculated independently, without considering other pixels. So the GPU was born and became a more efficient rendering tool than the CPU.

In short, because the CPU fell short at image rendering, the GPU was invented to take over that part of the work, and it has since become the hardware dedicated to it.
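The idea that each pixel's color can be computed without looking at any other pixel can be sketched in a few lines. This is an illustration only, not how a real GPU pipeline is programmed: the `shade` function, the tiny image size, and the gradient it draws are all hypothetical. Python threads stand in for GPU cores here and give no real speedup; the point is that the result does not depend on how the pixels are divided among workers.

```python
# Illustrative sketch of per-pixel independence (all names are hypothetical).
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # tiny image, for illustration only


def shade(x, y):
    """Compute one pixel's (r, g, b) color from its own coordinates only."""
    r = 255 * x // (WIDTH - 1)   # red increases left to right
    g = 255 * y // (HEIGHT - 1)  # green increases top to bottom
    return (r, g, 128)


def render_serial():
    """Render every pixel one after another, as a single core would."""
    return [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]


def render_parallel(workers=4):
    """Render the same image by handing pixels to a pool of workers."""
    coords = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, whatever order they ran in.
        flat = list(pool.map(lambda c: shade(*c), coords))
    return [flat[y * WIDTH:(y + 1) * WIDTH] for y in range(HEIGHT)]


if __name__ == "__main__":
    # No pixel reads another pixel, so both renderings are identical.
    print(render_serial() == render_parallel())
```

Because `shade` reads nothing but its own coordinates, the work can be split across any number of workers, which is the property that lets a GPU assign pixels to thousands of cores at once.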

With thousands of simpler cores, the GPU can efficiently handle tasks that the CPU struggles with. With the right code, these cores can carry out mathematical operations at very large scale and deliver a realistic gaming experience.

One thing to point out, however, is that the power of the GPU comes not only from its larger number of cores. Architects realized that the GPU's processing power needs to be matched by faster memory, which pushed researchers to keep developing higher-bandwidth versions of RAM. Today, the GPU's memory bandwidth is an order of magnitude higher than the CPU's, thanks to leading-edge memory technologies such as GDDR5X and HBM2, with GDDR6 under development. This gives the GPU a huge advantage in reading and processing data.
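As a rough sketch of where such a gap comes from, peak memory bandwidth can be estimated as bus width times per-pin data rate, divided by 8 to convert bits to bytes. The two configurations below are illustrative assumptions, not figures from this article: a 256-bit GDDR5X interface at 10 Gbit/s per pin, and a dual-channel DDR4-2400 setup treated as a 128-bit bus at 2.4 Gbit/s per pin. The function name is hypothetical.

```python
# Illustrative sketch: theoretical peak memory bandwidth.
# The example configurations are assumptions chosen for illustration.


def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Return theoretical peak bandwidth in GB/s (bits converted to bytes)."""
    return bus_width_bits * gbit_per_s_per_pin / 8


# Assumed GPU memory: 256-bit GDDR5X interface at 10 Gbit/s per pin.
gpu_bw = peak_bandwidth_gb_s(256, 10.0)

# Assumed CPU memory: dual-channel DDR4-2400 (2 x 64-bit at 2.4 Gbit/s per pin).
cpu_bw = peak_bandwidth_gb_s(128, 2.4)

if __name__ == "__main__":
    print(f"GPU: {gpu_bw:.1f} GB/s, CPU: {cpu_bw:.1f} GB/s, "
          f"ratio: {gpu_bw / cpu_bw:.1f}x")
```

With these assumed figures the GPU side comes out at several times the CPU side, approaching the order-of-magnitude difference described above; real sustained bandwidth is lower than these theoretical peaks on both sides.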

With these two advantages, GPUs have gained a foothold in general-purpose computing.
