Reinforcement Learning in Artificial Intelligence and Machine Learning

There are seven main types of machine learning algorithms in artificial intelligence: 1) Supervised Learning, 2) Unsupervised Learning, 3) Semi-supervised Learning, 4) Deep Learning, 5) Reinforcement Learning, 6) Transfer Learning, and 7) Others.

Today we focus on Reinforcement Learning (RL).

Reinforcement learning (RL), also known as evaluative learning, is an important machine learning method with many applications in intelligent robot control, analysis, and prediction.

So what is reinforcement learning?

Reinforcement learning is the process by which an intelligent system learns a mapping from environment states to actions so as to maximize the reward (reinforcement) signal. It differs from supervised learning in connectionist learning mainly in the teacher signal: the reinforcement signal is provided by the environment and evaluates how good an action was (usually as a scalar), rather than telling the reinforcement learning system (RLS) how to produce the correct action. Because the external environment provides very little information, the RLS must learn from its own experience. In this way, the RLS acquires knowledge in an action-evaluation environment and improves its course of action to adapt to the environment.

In layman's terms: when a child is confused or puzzled while learning, the teacher gives positive feedback (a reward or encouragement) if the child's method or idea is correct, and negative feedback (a lesson or punishment) otherwise. This draws out the child's potential and strengthens his or her ability to learn independently, so that the child actively learns and explores on his or her own and eventually finds the right method or idea for adapting to a changing external environment.

Reinforcement learning differs from traditional supervised machine learning in that it does not receive a label immediately; it only receives feedback (a reward or a penalty). In this sense, reinforcement learning can be seen as a kind of supervised learning with delayed labels. It developed from theories of animal learning and of parameter-perturbation adaptive control.

Reinforcement learning principle:

If an agent's behavioral strategy leads to a positive reward (reinforcement signal) from the environment, the agent's tendency to produce that strategy is strengthened. The agent's goal is to find the optimal policy in each discrete state so as to maximize the expected sum of discounted rewards.
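The goal above can be made concrete with the discounted return $G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots$, where the discount factor $0 \le \gamma \le 1$ weights later rewards less. The minimal sketch below computes this quantity for one finished episode; the function name `discounted_return` and the default $\gamma = 0.9$ are illustrative assumptions, not taken from the original text.

```python
def discounted_return(rewards, gamma=0.9):
    """Return G_0 = r_1 + gamma*r_2 + gamma^2*r_3 + ... for one episode.

    `rewards` lists the rewards received after each step, in order.
    The default gamma = 0.9 is an illustrative assumption.
    """
    g = 0.0
    # Accumulate backwards so each earlier step applies one more factor of gamma.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


if __name__ == "__main__":
    # A delayed reward: nothing for three steps, then +1 at the end.
    print(discounted_return([0.0, 0.0, 0.0, 1.0], gamma=0.9))  # ≈ 0.729 (= 0.9**3)
```

The example also shows why the feedback acts as a delayed label: a reward that arrives only at the last step still contributes, discounted, to the return of the first step.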

Reinforcement learning treats learning as a process of trial and evaluation. The agent selects an action and applies it to the environment. On receiving the action, the environment changes state and at the same time sends a reinforcement signal (a reward or a punishment) back to the agent. The agent then selects the next action based on the reinforcement signal and the current state of the environment; the selection principle is to increase the probability of receiving positive reinforcement (reward). The selected action affects not only the immediate reinforcement value but also the state of the environment at the next moment, and therefore the final reinforcement value.
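The selection principle described above (make positively reinforced actions more likely) is captured in its simplest form by the classic linear reward-inaction learning automaton. The sketch below is a single-state simplification written under that assumption: it ignores the environment state, and the helper names, the learning rate `lr = 0.1`, and the Bernoulli reward environment are hypothetical.

```python
import random


def reward_inaction_update(probs, chosen, rewarded, lr=0.1):
    """Linear reward-inaction (L_R-I) update of action-selection probabilities.

    On reward, move probability mass toward the chosen action; on
    penalty, leave the probabilities unchanged ("inaction").
    """
    if not rewarded:
        return list(probs)
    return [
        p + lr * (1.0 - p) if i == chosen else p * (1.0 - lr)
        for i, p in enumerate(probs)
    ]


def run_automaton(success_probs, steps=2000, lr=0.1, seed=0):
    """Simulate the automaton against a hypothetical Bernoulli environment."""
    rng = random.Random(seed)
    n = len(success_probs)
    probs = [1.0 / n] * n  # start with no preference
    for _ in range(steps):
        # Stochastic choice: sample an action according to the current probabilities.
        a = rng.choices(range(n), weights=probs)[0]
        # Environment feedback: reward with the (hidden) success probability of a.
        rewarded = rng.random() < success_probs[a]
        probs = reward_inaction_update(probs, a, rewarded, lr)
    return probs


if __name__ == "__main__":
    print(run_automaton([0.2, 0.8]))
```

With a small learning rate the automaton usually, though not always, concentrates its probability on the more frequently rewarded action; a larger `lr` learns faster but is more likely to lock onto a worse action.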

If the gradient information $\partial R/\partial A$ were known, a supervised learning algorithm could be used directly. However, because there is no explicit functional relationship between the reinforcement signal $R$ and the action $A$ produced by the agent, the gradient $\partial R/\partial A$ cannot be obtained. A reinforcement learning system therefore needs some kind of stochastic unit: using it, the agent searches the space of possible actions and discovers the correct ones.
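A common choice for such a stochastic unit is ε-greedy exploration, shown below on a multi-armed bandit (a one-state problem) as an illustrative stand-in rather than the specific method the text has in mind. The reward function is treated as a black box that can be sampled but not differentiated, so no $\partial R/\partial A$ is needed; the function name, `epsilon = 0.1`, and the hidden reward means are assumptions.

```python
import random


def epsilon_greedy_bandit(reward_fn, n_actions, steps=1000, epsilon=0.1, seed=0):
    """Find a good action using only sampled rewards (no dR/dA needed).

    reward_fn(action, rng) is a black box: the agent can query it but
    cannot differentiate it. The random unit is the epsilon-greedy choice.
    """
    rng = random.Random(seed)
    estimates = [0.0] * n_actions  # sample-average reward per action
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(n_actions)  # explore: pick a random action
        else:
            a = max(range(n_actions), key=lambda i: estimates[i])  # exploit
        r = reward_fn(a, rng)
        counts[a] += 1
        # Incremental sample-average update of this action's estimated reward.
        estimates[a] += (r - estimates[a]) / counts[a]
    return estimates


if __name__ == "__main__":
    # Hypothetical black-box environment: noisy rewards around hidden means.
    means = [0.2, 0.5, 0.9]

    def noisy_reward(a, rng):
        return means[a] + rng.gauss(0.0, 0.1)

    est = epsilon_greedy_bandit(noisy_reward, len(means))
    best = max(range(len(est)), key=lambda i: est[i])
    print("estimates:", [round(v, 2) for v in est], "best action:", best)
```

Without the random branch, the agent would keep exploiting whichever action it happened to try first and might never discover the better ones.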

Reinforcement learning model

The reinforcement learning model includes the following elements:

1) Policy: a rule that defines how the agent behaves in a given environment at a given time. It can be viewed as a mapping from environment states to actions and is usually denoted π. Policies fall into two categories:

Deterministic policy: $a = \pi(s)$

Stochastic policy: $\pi(a \mid s) = P[A_t = a \mid S_t = s]$

where $t$ is the time step, $t = 0, 1, 2, 3, \ldots$;

$S_t \in \mathcal{S}$, where $\mathcal{S}$ is the set of environment states, $S_t$ is the state at time $t$, and $s$ is a particular state;

$A_t \in \mathcal{A}(S_t)$, where $\mathcal{A}(S_t)$ is the set of actions available in state $S_t$, $A_t$ is the action at time $t$, and $a$ is a particular action.
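The two kinds of policy can be sketched as plain lookup tables. The states, actions, and probabilities below are hypothetical, chosen only to contrast $a = \pi(s)$ with $\pi(a \mid s)$.

```python
import random

# Hypothetical toy state and action sets, for illustration only.
STATES = ["low_battery", "high_battery"]
ACTIONS = ["recharge", "search"]

# Deterministic policy a = pi(s): exactly one action per state.
deterministic_pi = {"low_battery": "recharge", "high_battery": "search"}

# Stochastic policy pi(a|s) = P[A_t = a | S_t = s]: one distribution per state.
stochastic_pi = {
    "low_battery": {"recharge": 0.9, "search": 0.1},
    "high_battery": {"recharge": 0.2, "search": 0.8},
}


def act_deterministic(s):
    """Always returns the same action for a given state."""
    return deterministic_pi[s]


def act_stochastic(s, rng):
    """Samples an action from pi(.|s)."""
    dist = stochastic_pi[s]
    actions = list(dist)
    return rng.choices(actions, weights=[dist[a] for a in actions])[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print(act_deterministic("low_battery"))  # always "recharge"
    samples = [act_stochastic("high_battery", rng) for _ in range(1000)]
    print(samples.count("search") / len(samples))  # close to 0.8
```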

2) Reward signal: the reward is a scalar that the environment returns to the agent at each time step in response to the agent's action. It measures how good that action was in the current situation, and the agent adjusts its policy based on it. It is commonly denoted $R$.

3) Value function: whereas the reward defines the immediate benefit, the value function defines the long-term benefit. It can be viewed as the expected cumulative (discounted) reward and is usually denoted $v$.

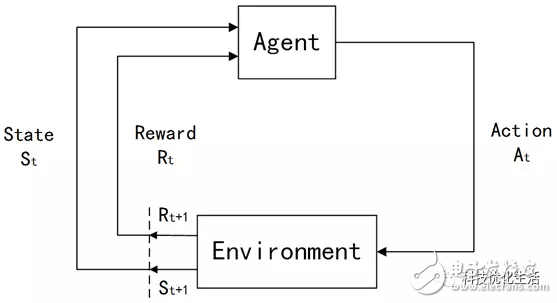
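In the usual formulation, the value of state $s$ under policy $\pi$ is the expected discounted return from that state, $v_\pi(s) = \mathbb{E}_\pi[R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots \mid S_t = s]$. When the environment's dynamics are known, $v_\pi$ can be computed by iterative policy evaluation, which repeatedly applies the Bellman expectation backup $v(s) \leftarrow \sum_a \pi(a \mid s)\,[r + \gamma v(s')]$ (written here for deterministic transitions). The sketch below does this for a hypothetical four-state chain; the environment, its rewards, and $\gamma = 0.9$ are assumptions made for illustration.

```python
def policy_evaluation(n_states, policy, gamma=0.9, tol=1e-10):
    """Iterative policy evaluation on a hypothetical 1-D chain.

    States 0..n_states-1; the last state is terminal (value 0).
    Actions: -1 (left) or +1 (right). Entering the terminal state gives
    reward +1; every other transition gives reward 0.
    policy[s] maps each action to its probability pi(a|s).
    """
    terminal = n_states - 1
    v = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(terminal):  # the terminal state's value stays 0
            new_v = 0.0
            for a, p in policy[s].items():
                s_next = min(max(s + a, 0), terminal)  # walls at both ends
                r = 1.0 if s_next == terminal else 0.0
                # Bellman expectation backup: weight r + gamma * v(s') by pi(a|s).
                new_v += p * (r + gamma * v[s_next])
            delta = max(delta, abs(new_v - v[s]))
            v[s] = new_v  # in-place update; still converges for gamma < 1
        if delta < tol:
            return v


if __name__ == "__main__":
    always_right = [{+1: 1.0}] * 3
    random_walk = [{-1: 0.5, +1: 0.5}] * 3
    print(policy_evaluation(4, always_right))  # ≈ [0.81, 0.9, 1.0, 0.0]
    print(policy_evaluation(4, random_walk))
```

The comparison illustrates the point of the value function: both policies receive the same immediate reward of 0 in most states, yet the policy that heads straight for the goal has higher long-term value everywhere.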

4) Model of the environment: the interaction between the agent and the environment can be represented by the following figure:

As the learning system, the agent observes the current state $S_t$ of the external environment, takes an exploratory action $A_t$ on the environment, and receives the environment's feedback $R_{t+1}$ and the new state $S_{t+1}$. If an action $A_t$ earns a positive (immediate) reward from the environment, the agent's tendency to produce that action is strengthened; otherwise, it is weakened. Through repeated interaction between the system's control actions and the state and evaluative feedback returned by the environment, the mapping from states to actions is continually revised by learning, so that system performance is optimized.
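The interaction loop just described can be sketched with tabular Q-learning, one standard reinforcement learning algorithm, used here as an illustrative choice rather than as a method the text prescribes. The agent observes $S_t$, chooses $A_t$ (mostly greedily, occasionally at random), receives $R_{t+1}$ and $S_{t+1}$, and then raises or lowers its estimate for taking $A_t$ in $S_t$. The `ChainEnv` class, its reward of +1 at the goal, and the hyperparameters are hypothetical.

```python
import random


class ChainEnv:
    """Hypothetical toy environment: states 0..n-1, goal at n-1.

    Action 0 moves left, action 1 moves right. Reaching the goal yields
    reward +1 and ends the episode; all other steps yield 0.
    """

    def __init__(self, n=5):
        self.n = n
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n - 1)
        done = self.state == self.n - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def q_learning(env, episodes=300, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning; returns q[state][action]."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(env.n)]
    for _ in range(episodes):
        s = env.reset()  # agent observes S_t
        done = False
        while not done:
            # Choose A_t: random with prob. epsilon (or on ties), else greedy.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next, r, done = env.step(a)  # environment returns S_{t+1}, R_{t+1}
            # Move Q(S_t, A_t) toward the target: strengthens or weakens A_t in S_t.
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


if __name__ == "__main__":
    env = ChainEnv(n=5)
    q = q_learning(env)
    greedy = ["right" if q[s][1] > q[s][0] else "left" for s in range(env.n - 1)]
    print(greedy)
```

After training, the greedy action in each non-goal state is expected to be "right", toward the reward: the reward observed only at the goal propagates backward to earlier states through the `gamma * max(q[s_next])` term.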
