Turing Award Winner Raj Reddy: Rethinking Artificial Intelligence from a Historical Perspective

Raj Reddy, professor at the Carnegie Mellon University School of Computer Science and Turing Award winner

On the morning of May 31st, Raj Reddy, a professor at the School of Computer Science at Carnegie Mellon University and a Turing Award winner, visited Microsoft Research Asia and gave a lecture titled "Re-examining Artificial Intelligence: A Historical Perspective." Prof. Reddy reviewed the achievements of computer science and artificial intelligence over the past 60 years from a historical perspective, responded to public concerns about the "AI threat theory", and offered a forecast for the "ultra-intelligence" of the future. This article is a condensed transcript of the speech.

More than 60 years ago, the pioneers of our field opened up new areas of research in computer science and artificial intelligence. Yet even today, people still have many misunderstandings and fears about artificial intelligence, believing that it will replace humans and rule the world. In the face of these doubts, I believe that only by understanding where we came from, where we are, and where we are going can we avoid being misled by these misconceptions.

Artificial intelligence will never rule the world. On the contrary, because artificial intelligence can become the "superpower" of every human being, people remain equal, and no one will be superior to others because of using it. If we trace the development of science and technology, we find that the development of artificial intelligence resembles any previous technological advance. The difference is that we have several million times more data than before. This data is the basis of everything we have achieved today. Whether it is machine translation of text, speech translation, or machine question answering, these breakthroughs cannot be separated from the strong support of data and computing power.

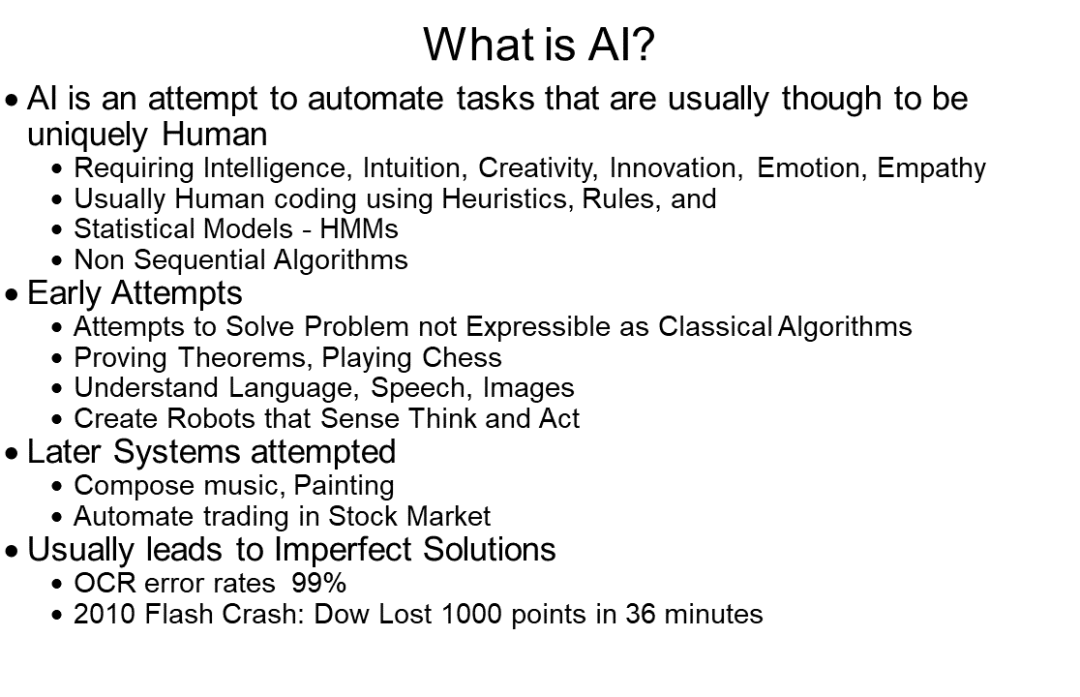

Sixty years ago, when my advisor John McCarthy first proposed the concept of "artificial intelligence" at the Dartmouth conference in 1956, the original aim was to make computers as intelligent as humans so that they could help people with tedious tasks, leaving people more time to pursue the work they love.

In fact, 60 years ago machines could already help people with some tasks, such as addition, subtraction, multiplication, and division. So people kept asking: can machines help us with other tasks? Scientists began to try. Turing said he believed that machines could have some intelligence, and Arthur Samuel began to study how to make a computer learn to play checkers. Machine learning thus emerged in the 1950s. At that time it was simple rule learning, exploring whether machines could discover, recognize, and use patterns. In the initial stage of artificial intelligence, therefore, people worked on problems such as playing chess, playing games, and proving theorems.

Then people began to explore whether computers could solve problems. They wrote programs that made computers attempt certain math problems. Because these problems can be solved only by exhausting all possibilities, computers solve them better and faster than humans.

In the first decade after Dartmouth, I was still a student at Stanford University. At that time, people thought that seeing, hearing, and speaking did not count as intelligence, because every ordinary person could do them. But the facts proved this idea wrong. From the second decade onward, we started to research and build robots, to make computers speak, and to open up new areas such as computer vision. We have come all this way through many difficulties, and there have also been many new breakthroughs.

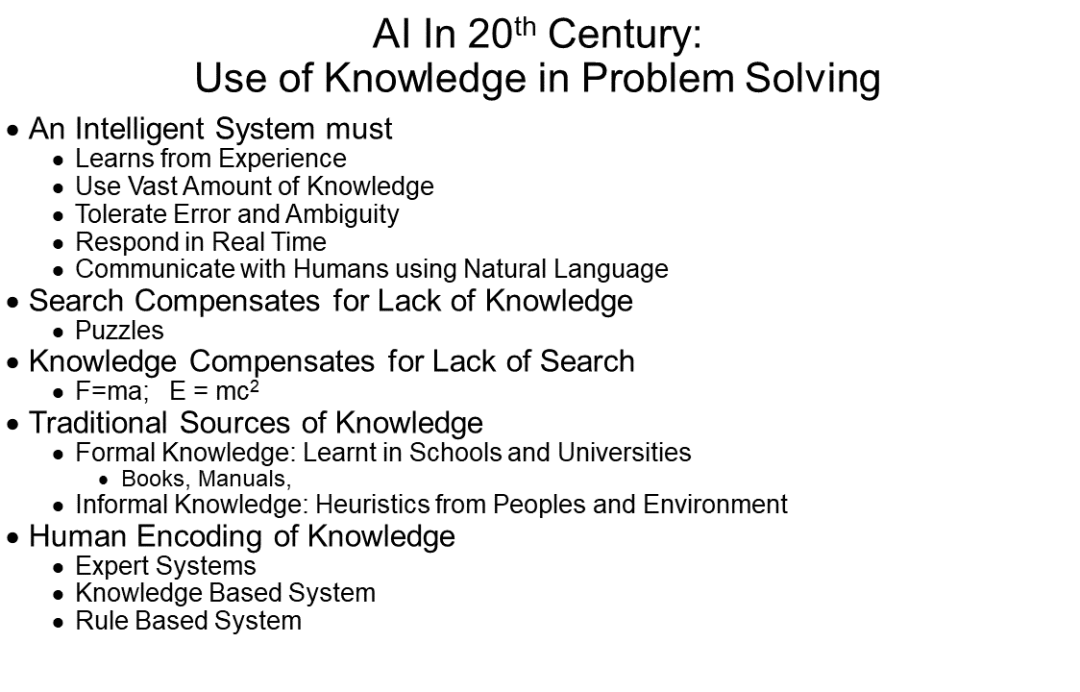

Some people think that only general-purpose artificial intelligence can solve certain common-sense problems. In fact, there is no general-purpose artificial intelligence at all. All intelligence is intelligence applied to a particular domain.

Marvin Minsky argued in The Society of Mind that human intelligence does not come from the whole brain working as one, but from thousands of regions, each with a specific function. Brain researchers have found that a region of about 1 cubic centimeter is dedicated to recognizing the mother's face. Of course, the brain also connects the area responsible for image recognition with the area responsible for language. In this way we link the mother herself with the word "mother", so these connections become the most important part of intelligence.

We hoped that computers could do what humans can do, such as speaking and learning. So people started to study expert systems, robots, self-driving cars, and so on. By the end of the 20th century, we had used AI technology to accomplish several tasks that are easy or moderately difficult for humans, such as theorem proving and chess. Later we reached a technological turning point: we had enough computing power, storage, and bandwidth to realize capabilities that we used to think we could never achieve.

AI can already accomplish certain tasks that were once considered uniquely human. In the future, can AI accomplish what humans cannot do? That would be not just general-purpose artificial intelligence, but ultra-intelligence. I think this is achievable in the future. Returning to the definition of AI and computer science, I proposed a definition in 1965: AI and computer science are disciplines that augment the capabilities of the brain. Everything that your brain can do, computers and AI can do better and faster, and they can even accomplish things that humans cannot.

In the 20th century, computer scientists encoded existing knowledge into programs and used various methods to let machines learn to solve human tasks. These ranged from simpler tasks such as solving math problems and playing chess, to understanding language, speech, and images, to more creative tasks such as robots that draw, compose music, and carry out automated stock trading.

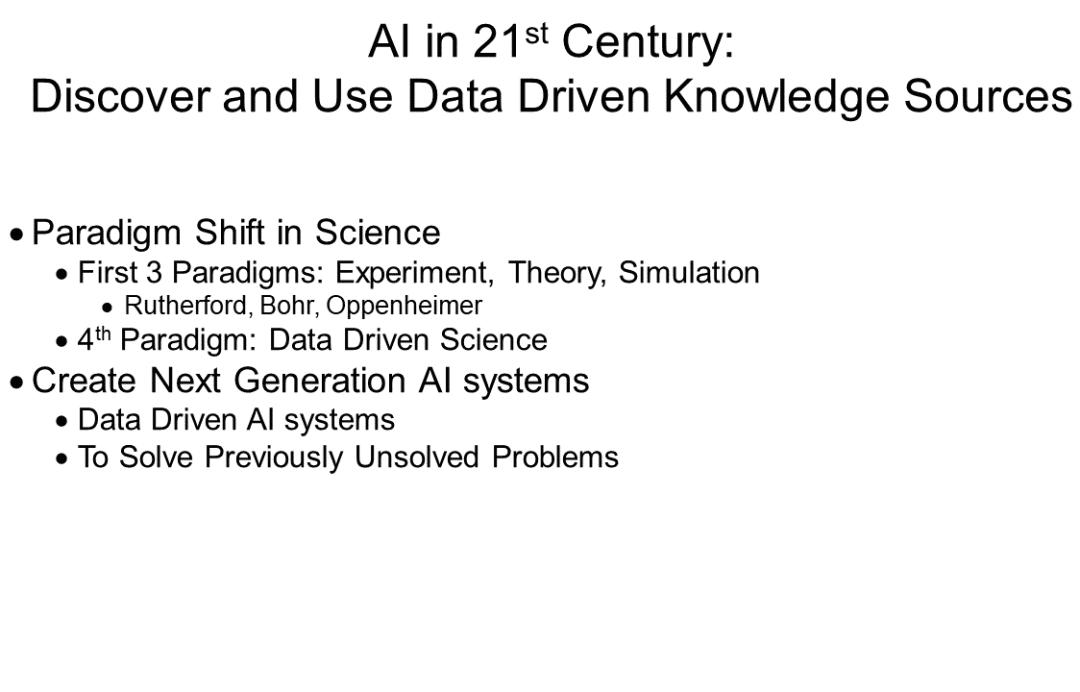

Compared with the 20th century, artificial intelligence in the 21st century differs in two ways:

First, we have experienced a paradigm shift. In the 21st century, major breakthroughs in computer science will be driven by big data. Microsoft Technical Fellow and Turing Award winner Jim Gray called big data the "fourth paradigm", following the three earlier paradigms of experimental science, theoretical deduction, and computer simulation. The next generation of artificial intelligence systems will therefore be data-driven.

Second, most of the knowledge people used to train artificial intelligence in the 20th century came from books. In the 21st century, we need to use artificial intelligence and big data to discover new knowledge in every industry. The sheer volume of data will exceed human processing capacity, and artificial intelligence and machine learning will become the core of the big data era. Perhaps one day, computers will learn to write the programs they need.

I want to give you a familiar example. Regional and gender inequality still exists in China's basic education. For example, children in the western region may find it difficult to make their way into the pyramid of higher education through the college entrance examination, and in some areas the number of women is far lower than that of men. Similar inequalities exist in education in California in the United States, where students from ordinary schools do not get the chance to demonstrate their academic ability the way students in top schools do.

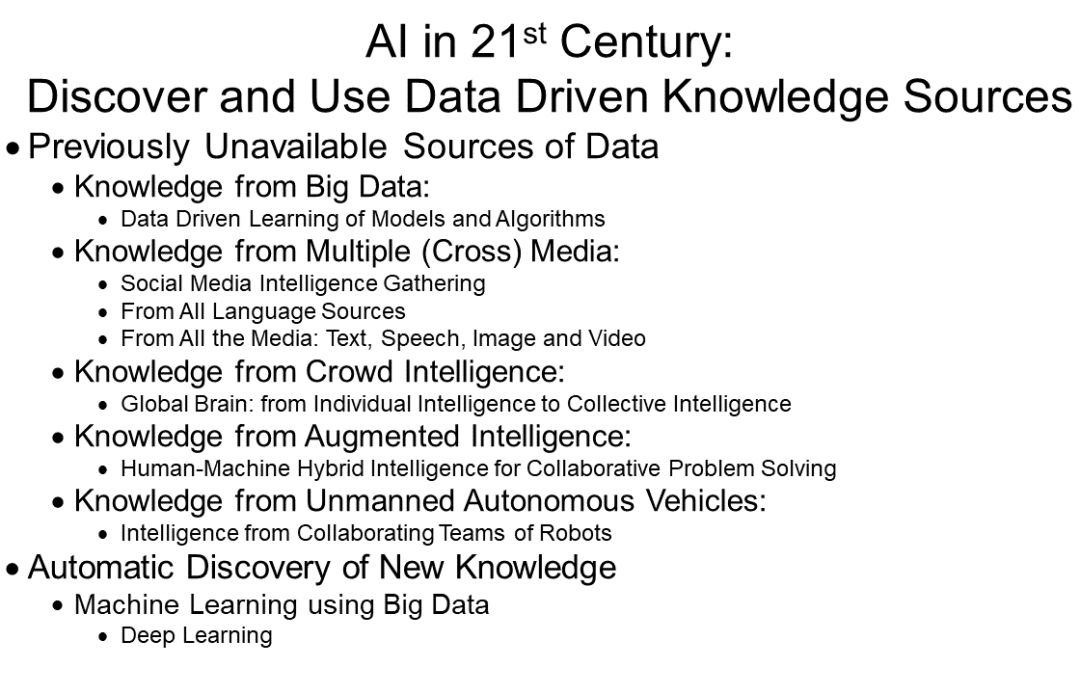

We can make a map based on big data to present and analyze this topic, making it an effective basis for government decision-making. So in the 21st century, we must find and use data-driven knowledge resources to solve problems in our society as much as possible.

So how do we use artificial intelligence to automatically discover new knowledge? We use big data today for machine learning and deep learning, but one of the problems facing deep learning at the moment is that we cannot explain how the correct answer was found. This issue may be resolved in the next 5-10 years and it will be our next task.

Finally, I want to revisit what has been achieved in the field of artificial intelligence over my career. From 1963 to 1969, I was at the Stanford Artificial Intelligence Laboratory, which had already begun research on robotics, language, knowledge engineering, and computer vision. In the 1960s, we invented the WYSIWYG graphics editor, Pieces of Glass (POG), and computational theory. In the 1970s, knowledge-centered artificial intelligence emerged, and there was a surge of interest in space exploration. We thought about how to apply artificial intelligence to space exploration, a problem that has not yet been solved. In the 1980s, we established a robotics laboratory at Carnegie Mellon University to study how robots can operate autonomously. In the 1990s, a computer defeated the world chess champion, and machines learned to read and understand, answer questions, and so on.

One question I have been interested in for a long time is whether artificial intelligence technology can be used by people without formal education. How would they use it? Our existing technology can be used for spoken language translation, a process that includes speech recognition, transcription, and translation. Translation between multiple languages is already technically feasible, but it has not yet been fully realized, because there are more than one hundred languages in the world for which data has not been collected, and collecting data requires a great deal of time and money.

Returning to our theme, the field of artificial intelligence achieved many major breakthroughs in the 20th century and left behind a series of thorny problems such as universal language translation and natural speech recognition. I once thought that they would not be solved in my lifetime. But in the first decade of the 21st century, these problems were quickly cracked, and we now have general language translation, spoken dialogue, self-driving cars, deep question answering systems, champion poker-playing programs, and so on.

Why were we able to achieve these breakthroughs? Because they all grew out of big data and machine learning, and computers have begun to do things that humans cannot. At the "Computing in the 21st Century" conference in November this year, I will talk about cognition amplifiers and guardian angels, which are also about computers accomplishing tasks that humans cannot. As a simple example, suppose an earthquake triggers a tsunami. If we do not know about it, it will be a fatal catastrophe. But if we have a guardian angel that can predict that huge waves will arrive in 10 minutes, we can save many lives. If there had been a guardian angel on Malaysia Airlines flight MH370, it would have detected that the captain was not following the original route, taken control of the aircraft, and landed at the nearest airport. Then everyone would have been saved.

So this is the future. The purpose of the technology we create is to protect humans and to push past human limitations. Each of us will be able to use large-scale artificial intelligence systems that act as our guardian angels, protecting humans, helping humans, and extending human perception and ability. Looking into the more distant future, I think we will have not only artificial intelligence and human intelligence, but also superhuman intelligence. But don't forget the most important point: because these are highly specialized intelligent machines, they will never rule the world, nor will they enslave us. Thank you all!

About Raj Reddy

Raj Reddy is a professor at the School of Computer Science, Carnegie Mellon University. He was awarded the Turing Award in 1994. He is a member of the National Academy of Engineering and the American Academy of Arts and Sciences. He served as Co-Chair of the President's Information Technology Advisory Committee (PITAC) from 1999 to 2001. Dr. Reddy has more than 50 years of research experience in the areas of artificial intelligence, speech understanding, image recognition, robotics, multi-sensor applications, and intelligent agents.
